The Future Is Already Here

There are not many ways to cause a stir in the classroom as an engineering professor, but one of them is surely to stand in front of a room full of bright-eyed, up-and-coming engineering students and inform them that “innovation, as we popularly understand it, is essentially dead.” Nevertheless, that is what I’ve taken to doing each year. Yes, I always put qualifiers on that statement (which you can perhaps catch a whiff of in the statement itself). I have been delivering “the talk” to students for several years now, outlining exactly what I mean by it, and encouraging them to push back and generate rebuttals. I always frame it as a challenge, rather than as a pronouncement from up on the stage. I am usually unmoved by the returns.

It begins like this: I ask students which inventions from their 20-odd-year lifetimes they feel have fundamentally changed how humans live. They get some space to think, but inevitably offer up their smartphones, at which point I nod, having begrudgingly accepted that device into my own pocket just a few years ago when informed that my simple flip-phone for making calls would no longer be supported. The students can then think of almost nothing to add to that. The silence is usually deafening. I pivot to a slide presentation of everything invented in another 20-year span of history, just 100 years prior. Plastic. The Model T. Movies. Zippers. The washing machine. The toaster. Neon. Radio. Escalators. Tractors. Safety razors. Air conditioning. Stainless steel. Sonar. Bras. Cellophane. Pyrex glass. Instant coffee. Aviation. Vacuum cleaners. Some students gaze at their darkened phones glumly, as if in consolation, while others seem to get where I’m coming from before I even start putting more numbers behind it.

My argument is not a novel one (it has been making the rounds, promoted in works like The Great Stagnation by Tyler Cowen on the economic side, and by high-profile figures like Peter Thiel), but I try to make it plain through a discussion of both popular culture and the broader scientific enterprise. We are, I argue, not in some era of fantastical innovation, nor are we really on the cusp of another. Rather, we have reached the end of one long and thrilling ride up the steep part of an S-shaped curve of technological development that kicked off with the steam engine and ended, surprisingly, jarringly, with the proliferation of the global Internet.

That last invention becomes a critical arrow in my argument’s quiver. The Internet, I argue, is a kind of meta-technology, one you would have expected to enable endless other technologies. It is a free-for-all of information that had previously been hoarded in libraries, which, compared to the ease of googling things, might as well have been guarded by fire-breathing dragons, the way I talk about the old days of retrieving information from them. Researchers, who are now more numerous and more active than at any point in world history, would presumably use such a resource to explore the garden of ideas and unlock wondrous discoveries on a daily basis. Indeed, a quick check reveals that patent applications and grants have grown noticeably since the mid-’90s, and the number of PhDs awarded has grown explosively since 2000 (fueled in part by the rise of China), with more academic research papers being published than ever (one 2016 estimate pegged it at about 2.3 million per year with a growth rate of 2.5 percent per annum).

So why, I ask, does a standard Western-world kitchen have no fundamentally new appliances in it since the 1970s? Instead of a fabulous new cooking device, we are now investigating how to connect our fridge to the Internet, presumably so that it can ping our phones when we’re out of hummus. “Why,” I ask plaintively, “is transportation getting us where we’re going no faster than in the ’70s?” Our attempts at supersonic passenger flights having gone nowhere practical, we instead tinker with replacing the driver of our car with a computer, which is meaningful from a safety perspective but hardly fundamental to getting around except for a few who cannot already drive. Why are our big, successful movies now mostly sequels, and our music industry mostly recycling beats and melodic ideas, kept truly “innovative” only through an occasionally unexpected fusion? Have we simply picked all the low-hanging fruit (as Cowen argues in his book’s subtitle)?

If you are following my argument, you will realize here that my definition of “innovation” is not “a somewhat bigger or smaller or faster phone,” or “a new gadget finding a niche market,” but that I am setting the bar pretty high: it has to look like those breakthroughs from the period surrounding the early 20th century. It would mean something new that is eventually in everyone’s home, because to not have one has been rendered folly or a mark of impoverishment. That is how I define my terms here, and I am contending that short of a few potential surprises, we’re mostly looking at the finished product of human tech.

The defense of innovation

I am, of course, out on a limb here. The historical road to this essay is riddled with people proclaiming something to the effect of “we’ve just about wrapped this thing up.” From the book of Ecclesiastes proclaiming “there is no new thing under the sun” to record producer Dick Rowe choosing not to award a contract to The Beatles because “groups of guitars are on their way out,” to the 1981 comment attributed (perhaps falsely) to Bill Gates suggesting that 640k of computer memory “ought to be enough for anyone,” it is often plainly foolish to underestimate the future.

There are any number of ways to rebut my seemingly pessimistic line of argument, and although I find none of them fully persuasive, they should be aired at this point. The first is a sort of breathtaking accounting of the speed of “innovation and disruption,” and for an example of this I will turn to what was printed in a Bloomberg piece by Barry Ritholtz in 2017:

Consider a simple measure of the time it takes for a new product or technology to reach a significant milestone in user acceptance. The Wall Street Journal noted it took the landline telephone 75 years to hit 50 million users… it took airplanes 68 years, the automobile 62 years, light bulbs 46 years, and television 22 years to hit the same milestones… YouTube, Facebook and Twitter hit that 50-million user mark in four, three and two years, respectively.

But upon closer inspection, this furious new technological pace is an argument that actually supports rather than undercuts my position. It is incontrovertible that we can now disseminate something more rapidly than ever. A case in point is the vaccine for the SARS-CoV-2 virus, the distribution of which is an unprecedented feat playing out in real time as this is being written. Surely this sort of rapid dissemination (to say nothing of the vaccine development itself) is an astonishing innovation? But this is like lauding a new 10-lane superhighway in a world with few cars. What it actually does, like that seldom-traveled highway, is put in stark relief our failure to generate those new paradigm-changing gadgets and send them zipping into people’s houses. Any home-changing discovery on the order of the common household microwave oven would have blazed across the planet by now rather than plodding along as in the days before Netflix. Instead, we now have some Roomba-adopters out there, somewhere.

This argument can also be leveraged against the second objection I often encounter, which goes like this: “How can we know what invention has been imagined in the past 20 years that will soon change our lives forever? It sometimes takes a while for an invention’s true value to become apparent.” I’ve had some of the keener students offer this up in class, and I generally nod along because I can think of examples of unexpected technological spin-offs (indeed, I sleep on one each night, since my Tempur-Pedic mattress owes its origins to space-travel research). But then I return to thinking about how fast dissemination is now, and the sheer number of pickers of high-hanging fruit there are, goaded on by investors and profitable opportunity, clambering up the ladders to get the bits of fruit that remain on the increasingly bare trees.

The garden of innovation is flooded with people poking and shaking each tree as never before, producing a record number of patents and corresponding economic activity. Still, we are a long way from the days of the lone researcher toiling away in their basement on some earth-shattering development like the home computer. As noted in 2017 by a research group from the National Bureau of Economic Research:

Our robust finding is that research productivity is falling sharply everywhere we look. Taking the US aggregate number as representative, research productivity falls in half every 13 years—ideas are getting harder and harder to find. Put differently, just to sustain constant growth in GDP per person, the US must double the amount of research effort searching for new ideas every 13 years to offset the increased difficulty of finding new ideas.
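
For the arithmetically inclined, the quoted figures can be unpacked with a few lines of Python. This is only a rough sketch and not the NBER authors' method: it assumes the decline is a steady exponential, and the names `HALVING_YEARS` and `effort_multiple` are my own, introduced purely for illustration.

```python
HALVING_YEARS = 13  # taken from the quoted finding

# Assumption: productivity shrinks by the same factor every year
# (a steady exponential decline), so it halves every 13 years.
annual_factor = 0.5 ** (1 / HALVING_YEARS)
annual_decline_pct = (1 - annual_factor) * 100

# Research effort must double over the same 13 years to offset the decline.
effort_growth_pct = (2 ** (1 / HALVING_YEARS) - 1) * 100


def effort_multiple(years, doubling_years=HALVING_YEARS):
    """Hypothetical helper: how many times today's research effort is
    needed after `years` just to keep growth constant."""
    return 2 ** (years / doubling_years)


print(f"Implied annual decline in research productivity: {annual_decline_pct:.1f}%")
print(f"Implied annual growth needed in research effort: {effort_growth_pct:.1f}%")
print(f"Effort needed after 26 years: {effort_multiple(26):.0f}x today's")
```

Under that assumption, a halving every 13 years works out to research productivity falling by roughly 5 percent a year, with research effort needing to grow by about 5.5 percent a year, and to quadruple over 26 years, just to stand still.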

“But what about my phone,” you might ask, “isn’t that a show-stopper?” That of course depends on whether you consider “increased convenience” to be meaningful innovation—long before it became familiar in my pocket, I was already on screens all day, usually sitting comfortably in a chair, whereas now I can be on screens doing all the same things I was already doing, but now while walking between locations. Screens, of course, can now be on my wrist (thanks to Apple), or, increasingly, attached to my face (thanks to Google and its ill-fated investigation into a face-hugging glasses-phone nobody wanted). What about my household TV, which can now be in 3D… wait, never mind, since that suffered a colossal market failure. Now it can be a curved screen, offering increases in sharpness that cannot be detected by humans… I’ll pass, thanks. I have my eye instead on a fifth PlayStation generation, sporting graphics that look more or less identical to the previous model except to a certain kind of visual technophile, with technology having brought us to a sort of end-of-history as far as realism in visual presentations is concerned.

“Still,” you argue, undeterred, “a revolution could be just around the corner.” Indeed, I do still light up when I consider a few sectors of technology where I think some real breakthroughs are possible. I never fail to mention to my students that people are still working on problems in energy and in medicine that could become true game-changers for civilization. But even these sorts of innovations may end up changing the way we live only behind the curtain—a clean energy source would be terrifically meaningful from a global climate perspective, and a medicinal breakthrough may offer genuine relief for any number of conditions, but as far as changing “how most people live out their home lives” there appears to be little on the horizon.

Implications

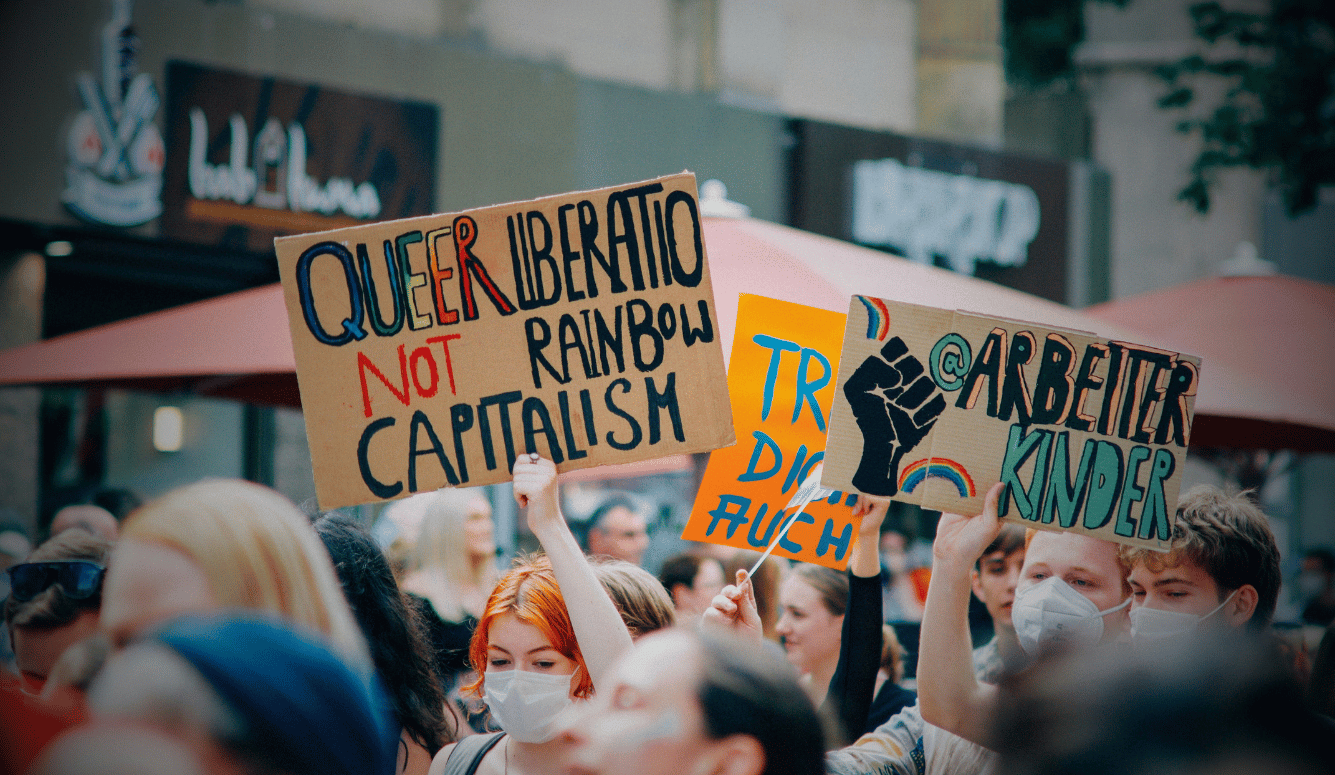

So why should anybody trot out this idea, and what would it mean in terms of how humanity understands itself? For a start, I think we’ve already sort of absorbed this idea. The battle to persuade people that the future looks a lot like the present is over, and you can see it in our films, most of which are now more interested in exploring various possible failures of the system than improvements to it. Writing for the Guardian in 2014, Damien Walter put it like this: “The once optimistic vision of competent men tinkering with the universe has been replaced with science gone awry—killer viruses, robot uprisings and technocratic dystopias reveling in the worst of our possible futures.” We’ve already sifted what was possible in Star Trek (the tablet and phone) from what was absurd (the transporter). We already know the flying cars from The Jetsons are more trouble than they are potentially worth, more of a terrifying liability over our heads than a technological inevitability. Our science fiction now is more Children of Men than 2001: A Space Odyssey, where the future is identified by the louder, brighter advertising on more surfaces.

And yet, as I tell my engineers, we have not run out of problems to solve. There are other “I” words that are no less worthy of our admiration and ambition than “innovation.” Consider instead “iteration,” “improvement,” and “inspiration.” One can still sell people smartwatches, and create “new needs” for people who have already established lives of comfort. So what do the S-shaped curve and the (mostly bare) trees of technological breakthroughs actually mean for us, as a species?

If I am to prognosticate, I would say it means a pivot away from a collective obsession with a technological “growth mindset” towards one of maintaining and safeguarding what we (wealthy, industrialized people) already have, while focusing more on helping others join the ranks of those fortunate citizens who enjoy high standards of living. Consider us in this scenario to already be the denizens of that shiny future we once saw in our science fiction films! If the world outside seems a little humdrum to you for being too familiar, then from where you are standing or sitting try to think of yourself as being part of some long-ago imagined future—marvel at how inconceivable all of the stuff around you would have been to one of your distant (or even not-so-distant) ancestors before humans clambered up the steep slope of the 20th century.

Then, imagine some wild technologies that may yet be in our own future (brain-interfaced computing, a personal holodeck, a sock that never gets holes in it) and remember that (apart from areas like medicine and climate-mitigation) these technologies aren’t things we really need anymore, but represent things that would perhaps be “nice to have.” Our early 20th-century predecessors were, after all, reporting somewhat higher levels of life satisfaction than us despite many lacking so many of our modern conveniences. My advice in this context is this: Manage your expectations for the future, treasure the marvels of your modern environment, and may the plateau we’ve arrived at be a prosperous one we can someday extend to all persons.